What Does ChatGPT Mean for Benefits Communications?

Unless you’ve been offline on a remote island for the past 6 months, chances are you’ve been inundated with news, hype, and hot takes on the latest craze: ChatGPT. While my inbox and Twitter feed have been overflowing with articles and blogs on the topic, this blog post addresses the impact of ChatGPT on HR benefits communications. Don’t get me wrong: the potential disruptions in other areas of HR, like talent acquisition, business process automation, and performance management, are all worthy of ink too.

Some Quick Background

Generative AI is a type of artificial intelligence (AI) that creates new content, including text, images, audio, and video. In September 2022, San Francisco startup OpenAI released DALL-E 2, an AI system that can create realistic images and art from common, conversational language prompts. Generative AI systems have taken a lesson from voice assistants like Siri and Alexa: they are easy to interact with. No special commands, code words, or programming are required; just talk or type as you normally would, and, like magic, you get results.

In November 2022, OpenAI introduced the chatbot ChatGPT. Although chatbots are not new, this technology has the potential to be both more useful and more disruptive than earlier versions.

Proceed with Caution

Warning: The ability of AI chatbots to provide reliable information is only as good as the material the tools are trained on, and, as with any AI, there are always concerns about bias in the data used to create the results. ChatGPT was trained on an impressive 45 terabytes of information from sources such as Wikipedia and web page data up through September 2021.

It is hard to get your head around how much information can be stored in 45 terabytes. According to ChatGPT, it would take more than 1.5 million 3-ring binders to hold the printed text equivalent of 45 terabytes of data.

ChatGPT has continued to evolve since it was introduced. Now, ChatGPT Plus, a subscription plan, has access to the internet, in addition to the information it was initially trained on. Still, although ChatGPT continues to improve daily, relying on content generated by an AI chatbot without fact-checking the results can lead to wrong answers and misinformation. ChatGPT’s own search page warns users that the chatbot “may occasionally generate incorrect information.”

Although this transformative technology offers much to be excited about, it also raises many areas of concern that must be acknowledged, addressed, and mitigated. Numerous privacy, legal, ethical, security, and bias concerns associated with AI have been documented already.

According to a 2022 McKinsey study, only 27% of the employees developing AI solutions identify as women, and only 25% identify as racial or ethnic minorities. According to Harvard Business Review, “If the team developing the tech is not inclusive, then neither is the tech.”

The Tsunami Is Coming

The genie is out of the bottle, and millions have seen the exciting possibilities that this new technology brings. Tech giants like Google and Microsoft are transforming their businesses to embrace it. Microsoft 365 Copilot will soon be embedded in Microsoft products like PowerPoint, Excel, Word, Outlook, and Teams, which will enable users to issue short conversational prompts to quickly produce presentations, documents, proposals, graphs, etc.

Another company, Grammarly, is introducing a ChatGPT-style AI tool to help with writing and editing. Gartner predicts that by 2025, 30% of outbound marketing messages from large organizations will be synthetically generated, up from less than 2% in 2022.

It’s a Tool, Not an Answer

People who know me well know that one of my constant refrains (and favorite phrases) is “the appropriate use of technology.” We should remember that ChatGPT is a tool that can be used for good or bad, and it is up to us (humans) to find creative, good ways to use it, while acknowledging its limitations.

In many ways, I think of ChatGPT as the next (very dramatic) evolution of spell check or search. Spell checking and internet searching are so ingrained in our workflows today that we take them for granted. Not sure how to spell something? Don’t worry; spell check will catch it. Don’t know an answer? Google it. While these tools are designed to help us be more efficient, they don’t replace human creativity, empathy, judgment, or accountability. One day soon, generative AI will be embedded in many of our workflows, and we will take it for granted too. We are just scratching the surface of its possibilities, and its impact will be profound.

How Generative AI Will Impact Benefits Communications

Generative AI has the potential to transform the benefits communications landscape in a number of ways. Here are 5 ways we see this playing out:

- Simplifying the complex and cumbersome

- Creating content

- Improving customer service

- Generating ideas and brainstorming

- Creating images

Simplifying the Complex and Cumbersome

Generative AI does a wonderful job of distilling content that it has been trained on. In the benefits space, think of all the complicated issues that we communicate to participants and their families: leaves of absence, retirement, health care, etc. Our industry is ripe for technology that easily simplifies complex subject matter. But beware! Generative AI will make up things that it does not know.

Example:

Jon to ChatGPT: Summarize the Affordable Care Act in 50 words or less.

ChatGPT: The Affordable Care Act, also known as Obamacare, is a healthcare law passed in 2010. It aims to provide more affordable healthcare options for Americans by expanding Medicaid, establishing health insurance exchanges, and implementing mandates for individuals and employers to have health insurance.

Note: The ACA summary is out of date, because the federal individual mandate penalty was reduced to $0 starting in 2019, which effectively repealed the mandate. This is a good reminder that the tool is only as good as the data it is trained on and that it’s important to fact-check all results.

Kathryn Bakich, senior vice president and Health Compliance practice leader at Segal, warns against using tools like ChatGPT to simplify complex plan documents. “Plan documents and SPDs serve as an important legal basis to protect the plan sponsor and plan participants. AI may interpret provisions incorrectly or contrary to the intent of the plan sponsor. Consequently, AI would not be useful to summarize plan documents or employer policies, at least not without legal review of the result.”

Creating Content

Going forward, tools like ChatGPT will be helpful virtual assistants for content writers. Whether they’re used as aids to overcome writer’s block or to create a rough first draft, these tools will allow writers to spend more of their time being creative and editing copy. Adding human empathy, adjusting for tone of voice or brand, verifying accuracy of information, and providing point of view are all critical to successful benefits communications. And they’re things that AI can’t do easily and that still need a human owner. Using generative AI to write content also opens up topics around plagiarism, citations and referencing information, ownership, and copyright issues.

Example:

Jon to ChatGPT: Explain how medical deductibles and coinsurance work to a 5th grader in 50 words or less.

ChatGPT: Medical deductibles are the amount you pay before insurance starts covering your medical expenses, while coinsurance is the percentage of medical expenses you pay after meeting the deductible.

Note: I tested this output with my 5th grade son, and his feedback was that it was “okay.” He gave it an 8 out of 10. He said it would have been better to also explain what “insurance” was.

Improving Customer Service

Many organizations struggle with communicating across generations. Over time, expectations and preferences change. Today, employees expect their employer’s benefit information to be easily accessible on the web. Tomorrow, employees will have higher expectations around interactive chatbots, AI-based Q&A tools, and personalized content that is relevant to them. Part of generative AI’s draw is that its human-like conversational style makes you feel as if you’re talking to a real person. As people use generative AI in their daily lives, those same expectations are likely to spill over to benefits communications as well.

Generating Ideas and Brainstorming

Stuck for ideas? Although AI shouldn’t be used in place of brainstorming with a diverse group of people, it can help get ideas flowing, and it might offer ideas that no one in your group could come up with on their own. The promise is that it is quick and easy to do, and it can help you get unstuck.

Example:

Jon to ChatGPT: Brainstorm 10 different names you could use instead of “high-deductible health plan.”

ChatGPT:

- Basic Health Plan

- Entry-Level Health Plan

- Starter Health Plan

- Budget Health Plan

- Essential Health Plan

- Core Health Plan

- Minimalist Health Plan

- Thrifty Health Plan

- Cost-Saving Health Plan

- Economy Health Plan

Creating Images

While the image creator technology is not nearly good enough today, the improvements promised in the generative digital images and video fields are worth noting. Today, one limitation of using stock photography is finding authentic photos that will resonate with targeted audiences. It’s tempting to think that AI image generators will enable designers to craft images to exact specifications, but this also introduces more questions and considerations.

For example, after Levi Strauss & Co. recently announced that it would use AI-generated models to increase diversity, the company received a lot of negative pushback. This raises the questions, “Is using AI-generated models more or less authentic than using stock photography?” and “What happens when the technology gets good enough that no one can tell the difference?”

As this area continues to evolve, it will be important to keep tabs on the cost, licensing, and legality of AI-generated images, because these subjects haven’t been fully addressed to date. Shutterstock recently announced a partnership to legally license AI images, but only time will tell how this shakes out. How the AI generators were trained and who gets paid are among the current questions.

Example:

Jon to AI image generator: photo of a 45-year-old electrician on a worksite wearing proper safety equipment.

AI image generator:

AI-generated image of an electrician wearing safety equipment.

The Answer Is Not “No,” but “What and How”

When I started my professional career back in 1996, my employer at the time blocked employees’ access to the internet. Back then, it was a relatively new technology with lots of unknowns. Thankfully, that policy was revisited shortly thereafter.

As with other revolutionary tools, HR must anticipate how this new technology will change work and expectations.

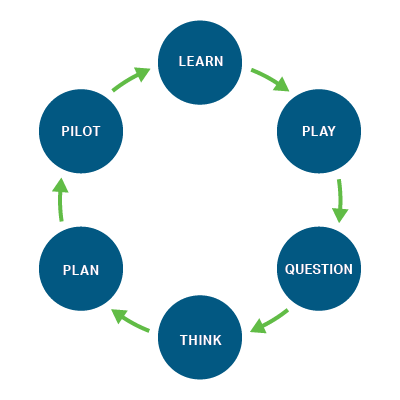

Generative AI is very early in its lifecycle, and things are changing daily. Don’t be overwhelmed by all the buzz and noise. Instead, think of the possibilities and how liberating these tools can be when they perform tedious, time-consuming tasks that free us up to think more critically and creatively. As you explore how to integrate generative AI into your work, consider this framework to guide your testing process:

Diagram of the iterative process for incorporating AI into your work: learn, play, question, think, plan, pilot, learn.

It’s easy to get caught up in brainstorming the possibilities of this amazing technology. But it is more important to establish guidelines and rules to ensure that the way the technology is used, and its output, meet your organization’s ethical, security, privacy, and business standards.

Joey Allen, senior consultant at Segal Benz, summarizes it well: “Thinking deeply about the process for integrating generative AI into our work requires human involvement and oversight. And reviewing how to use it can also help folks who are not as comfortable with technology produce more meaningful and accurate results with ChatGPT.”

Expect rapid changes in the field to continue. The technology will most surely transform the way we work. But remember that, as a tool with limitations and blind spots, ChatGPT—and other generative AI tools—should be used mindfully, with common sense. If you trust it blindly, you might just end up like Michael Scott from “The Office” when the GPS told him to drive into a lake. Don’t get wet.

We’re proud to work with organizations that value their people. If you want to learn more, we’d love to talk.

Jon Stuckey, VP Creative Technology and Innovation, provides strategic vision, guidance, and solutions for our largest clients.